Building on seven years of record-breaking developments, an international team of physicists, computer scientists, and network engineers led by Caltech – with partners from Michigan, Florida, Tennessee, Fermilab, Brookhaven, CERN, Brazil, Pakistan, Korea, and Estonia – set new records for sustained data transfer among storage systems during the SC08 conference in Austin, TX.

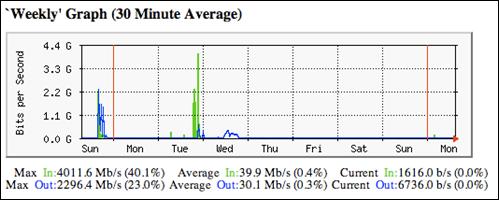

The high-energy physics (HEP) team demonstrated record-breaking storage-to-storage data transfers over wide-area networks from a single rack of servers on the exhibit floor. They achieved a bidirectional peak throughput of 114Gbps and a sustained data flow of more than 110Gbps among clusters of servers on the show floor and at Caltech, Michigan, CERN (Geneva), Fermilab (Batavia), Brazil (Rio de Janeiro, São Paulo), Korea, Estonia, and locations in the US LHCNet network in Chicago, New York, Geneva, and Amsterdam.

Following up on the previous record of more than 80Gbps sustained among storage systems over continental and transoceanic distances, set at SC07 in Reno, NV, the team used a small fraction of the global LHC grid to sustain transfers at a total rate of 110Gbps (114Gbps peak). The transfers ran between the Tier1, Tier2, and Tier3 facilities at the partners' sites and a Tier2-scale computing and storage facility that the Caltech/CACR and HEP teams built on the exhibit floor in two days. The team sustained rates of more than 40Gbps in both directions for many hours (and up to 71Gbps in one direction). This shows that a single well-designed, well-configured rack of servers can now saturate the highest-speed wide-area network links in production use today, which have a capacity of 40Gbps in each direction.
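For a sense of scale, here is a back-of-the-envelope conversion of the 110Gbps sustained rate into data moved per hour. It assumes decimal units (1 GB = 10^9 bytes, 1 TB = 10^12 bytes) and a perfectly constant aggregate rate, which real transfers only approximate. This is an illustration, not a figure reported by the team.

$$
110\ \text{Gb/s} \times 3600\ \text{s} = 396{,}000\ \text{Gb} = \frac{396{,}000}{8}\ \text{GB} = 49{,}500\ \text{GB} \approx 49.5\ \text{TB per hour}
$$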

The record-setting demonstration was made possible through the use of twelve 10Gbps wide-area network links to SC08, provided by National LambdaRail (6), Internet2 (3), ESnet, Pacific Wave, and the Cisco Research Wave. Onward connections were provided by CENIC in California; the TransLight/StarLight link to Amsterdam; SURFnet (Netherlands) to Amsterdam and CERN; CANARIE (Canada) to Amsterdam; CENIC, Atlantic Wave, and Florida LambdaRail to Gainesville and Miami; US Net to Chicago and Sunnyvale; GLORIAD and KREONet2 to Daegu in Korea; GÉANT to Estonia; and WHREN, co-operated by FIU and the Brazilian RNP and ANSP networks, to reach the Tier2 centers in Rio and São Paulo.

URLs:

http://mr.caltech.edu/media/Press_Releases/PR13216.html

http://supercomputing.caltech.edu/

http://cerncourier.com/cws/article/cern/37317

Collaborators:

USA:

Caltech/Center for Advanced Computing Research (CACR); Caltech/Networking Laboratory (Netlab); U Michigan; Fermi National Accelerator Laboratory (Fermilab); Florida International University (FIU)/Center for High-Energy Physics Research and Educational Outreach (CHEPREO)

Switzerland:

CERN

Pakistan:

National University of Sciences & Technology (NUST)/School of Electrical Engineering and Computer Science

Brazil:

Rio de Janeiro State University (UERJ); University of São Paulo (USP); São Paulo State University (UNESP)/São Paulo Regional Analysis Center (SPRACE)

Estonia:

National Institute of Chemical Physics and Biophysics

Korea:

Kyungpook National University

Special thanks to: GLORIAD, TransLight/StarLight, WHREN-LILA, Internet2, National LambdaRail, ESnet, Florida LambdaRail, CENIC, AMPATH, StarLight, Pacific Wave, Academic Network of São Paulo (ANSP), National Education and Research Network of Brazil (RNP), SouthernLight, CANARIE, KREONet2, Myricom, Ciena, DataDirect Networks, QLogic, XKL, Force10, GlimmerGlass, Intel, NSF, DOE Office of Science